FDA Relaxes Oversight of AI Devices – Introduction

The FDA's relaxed oversight of AI devices is a major regulatory development shaping the future of digital health and medical technology worldwide. Artificial intelligence is increasingly embedded in healthcare systems, from diagnostics and imaging to patient monitoring and clinical decision support. As adoption accelerates, regulatory agencies face growing pressure to modernize oversight models that were originally designed for physical medical devices.

The recent policy shift by the US Food and Drug Administration (FDA) signals a move toward a more flexible, risk-based regulatory framework. The change does not eliminate FDA authority; it adjusts how and where oversight is applied. Because FDA policy often sets a global benchmark, the implications extend well beyond the United States.

This article provides a data-driven, detailed analysis of why the FDA relaxed oversight of AI devices, which products are affected, how companies and leaders are responding, and what this means for patients, providers, and investors.

What Does the FDA's Relaxed Oversight of AI Devices Actually Mean?

When commentators say the FDA has relaxed oversight of AI devices, they are referring primarily to its expanded use of enforcement discretion. Enforcement discretion means the FDA may choose not to enforce certain regulatory requirements for products it considers low risk to patient safety.

In practical terms, this approach allows some AI-enabled software tools to enter or remain on the market without formal premarket clearance, provided they meet specific criteria, including transparency, clinician involvement, and limited clinical risk. The FDA continues to regulate high-risk AI systems that diagnose or treat patients autonomously.

According to Jeff Shuren, long-time director of the FDA's Center for Devices and Radiological Health (CDRH), the agency's goal is to focus oversight on technologies where errors could cause direct patient harm. He has said in public forums that regulatory resources should prioritize higher-risk devices rather than low-risk support tools.

FDA Relaxes Oversight of AI Devices – Why the FDA Changed Its Approach

Rapid Expansion of AI in Healthcare

AI adoption in healthcare has accelerated faster than traditional regulatory frameworks can accommodate. Hospitals now use AI for workflow automation, predictive analytics, imaging support, and patient risk stratification. Reviewing every software update using legacy approval pathways has proven impractical.

Innovation and Global Competition

The United States faces increasing competition from Europe and Asia in digital health innovation. Regulatory delays can discourage investment and slow product development. Industry stakeholders have consistently urged regulators to adopt more adaptive oversight models.

Risk-Based Regulation Philosophy

The FDA has emphasized that not all AI tools pose equal risk. A wellness app powered by AI does not require the same level of scrutiny as an autonomous diagnostic system. The relaxed oversight approach reflects this differentiation.

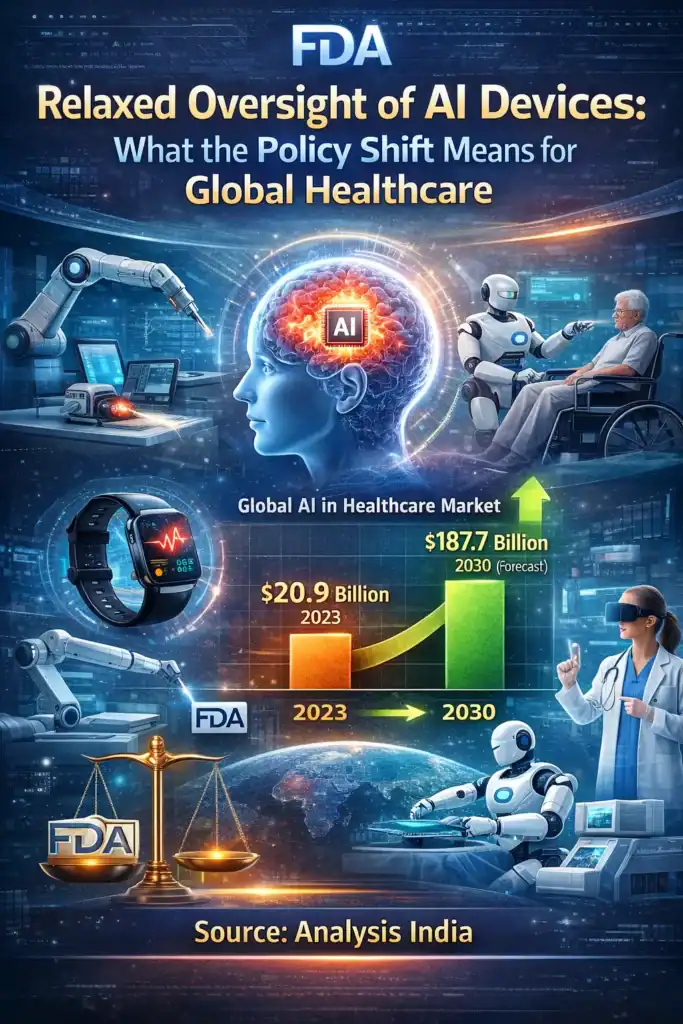

Market Size and Growth of AI in Healthcare

The policy shift is occurring against the backdrop of strong market growth.

Global AI in Healthcare Market

| Metric | Value |
|---|---|
| Market size (2023) | USD 20.9 billion |
| Expected market size (2030) | USD 187.7 billion |
| Forecast period | 2024 to 2030 |
| Source | Grand View Research |

Grand View Research identifies rising adoption of AI-driven diagnostics and digital health platforms as a major growth driver.
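The two table figures imply a compound annual growth rate (CAGR). As a quick check, assuming 2023 is the base year and growth compounds over the seven years to 2030 (the rate the research firm itself publishes may differ slightly because of rounding or a revised base estimate):

$$
\mathrm{CAGR} = \left(\frac{187.7}{20.9}\right)^{1/7} - 1 \approx 8.98^{1/7} - 1 \approx 0.368
$$

In other words, the forecast corresponds to growth of roughly 37% a year, or close to a ninefold increase in market size over the period.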

Investment Trends

According to Statista, global investment in healthcare AI startups crossed USD 16 billion in 2022, reflecting strong investor confidence in the sector.

FDA Relaxes Oversight of AI Devices – Clinical Decision Support Tools

Clinical decision support tools represent one of the most important categories affected by the FDA's relaxed oversight of AI devices. These software systems analyze patient data such as lab results, medical history, imaging, and vital signs to generate insights that assist healthcare professionals in making informed decisions.

Unlike autonomous systems, clinical decision support software does not replace clinician judgment. Instead, it provides recommendations, alerts, or risk assessments while allowing physicians to independently evaluate the underlying logic. This transparency is central to why these tools are considered lower risk by the FDA.

Under the relaxed oversight framework, many clinical decision support tools no longer require formal FDA clearance if clinicians can understand how the recommendation was generated and are not obligated to follow it. This change significantly lowers regulatory barriers for hospitals and technology providers while preserving professional accountability.
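The criteria described in this section can be expressed as a simple screening checklist. The sketch below is a hypothetical illustration only: the `CdsTool` fields and the `likely_lower_oversight` function are names invented for this example, they encode only the criteria this article describes (a clinician audience, a reviewable basis, freedom to override, and no autonomous action), and they are not a substitute for FDA guidance or a regulatory determination.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CdsTool:
    """Hypothetical description of a clinical decision support product."""
    name: str
    for_clinicians: bool          # output is directed to a healthcare professional
    basis_reviewable: bool        # clinician can see how the output was generated
    clinician_may_override: bool  # clinician is not obligated to follow the output
    acts_autonomously: bool       # tool diagnoses or treats without clinician review


def likely_lower_oversight(tool: CdsTool) -> Tuple[bool, List[str]]:
    """Screen a tool against the criteria described in this article.

    Returns (passes, reasons_it_fails). Illustrative triage only,
    not a regulatory determination.
    """
    reasons = []
    if not tool.for_clinicians:
        reasons.append("output is not directed to a clinician")
    if not tool.basis_reviewable:
        reasons.append("clinician cannot review how the output was generated")
    if not tool.clinician_may_override:
        reasons.append("clinician is expected to follow the output")
    if tool.acts_autonomously:
        reasons.append("tool diagnoses or treats autonomously")
    return (len(reasons) == 0, reasons)


# Example: a hypothetical alert that shows its reasoning and leaves the decision to the clinician.
alert = CdsTool("deterioration alert", True, True, True, False)
passes, reasons = likely_lower_oversight(alert)
```

Returning the list of failed checks, rather than a bare yes or no, gives a product team a concrete starting point for a conversation with regulatory specialists.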

FDA Relaxes Oversight of AI Devices – Wearable and Wellness AI Devices

Wearable health devices increasingly rely on AI algorithms to deliver insights related to heart rate, sleep patterns, physical activity, and stress levels. These products are often positioned as wellness tools rather than medical devices.

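Companies such as Apple and Fitbit integrate AI into consumer health products. Features that avoid diagnostic claims typically fall under enforcement discretion, while features that do make diagnostic claims, such as the ECG apps on Apple Watch and Fitbit devices, have gone through FDA review.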

The FDA’s approach allows manufacturers to innovate quickly while maintaining safeguards against misleading health claims.

AI in Medical Imaging and Diagnostics Support

AI is widely used in radiology and pathology to highlight areas of concern for clinician review. These systems improve efficiency by prioritizing cases or flagging anomalies.

If the AI does not provide a final diagnosis and instead supports professional review, it may qualify for reduced oversight. This distinction is critical in understanding how the FDA classifies AI risk.

Company Adoption and Industry Response

Medtronic

Medtronic uses AI across diabetes management and cardiac monitoring platforms.

Medtronic CEO Geoff Martha has stated that software-driven healthcare requires regulatory models that support continuous improvement rather than static approvals. He has emphasized that patient safety must remain central even with regulatory flexibility.

Google Health

Google Health develops AI for imaging and population health analysis.

David Feinberg, the former head of Google Health, has publicly stated that regulatory clarity enables responsible AI deployment and improves clinician trust.

Philips Healthcare

Philips applies AI across imaging and patient monitoring. Company leadership supports risk-based regulation that distinguishes between assistive and autonomous systems.

Expert Concerns and Risk Considerations

While industry leaders and technology companies have broadly welcomed the FDA's relaxed oversight of AI devices, several healthcare experts and policy analysts have raised important concerns. Their primary argument is that regulatory flexibility should not reduce accountability or weaken patient safety safeguards.

One major concern relates to algorithmic bias. AI systems are trained on historical data, and if that data lacks diversity, the system may produce inaccurate or unequal results across different populations. Eric Topol, a cardiologist and digital medicine researcher at Scripps Research, has repeatedly highlighted that many healthcare AI tools perform well in controlled environments but show variability when deployed across real-world clinical settings. In his view, relaxed oversight places more responsibility on developers to ensure fairness, transparency, and continuous validation.
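One common way to check for this kind of variability is to compare a model's sensitivity (the share of true cases it catches) across patient subgroups. The sketch below assumes a simple list of `(group, actual, predicted)` records; the function names, the 0.05 gap threshold, and the sample data are hypothetical choices for illustration, not a clinical or regulatory standard.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def sensitivity_by_group(records: Iterable[Tuple[str, bool, bool]]) -> Dict[str, float]:
    """Return sensitivity (true-positive rate) per subgroup.

    Each record is (group, actual, predicted): actual is True when the
    condition is present, predicted is True when the model flagged it.
    Groups with no actual positive cases are omitted.
    """
    true_pos: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_disparity(rates: Dict[str, float], max_gap: float = 0.05) -> bool:
    """True if best and worst subgroup sensitivities differ by more than max_gap.

    Expects at least one group in `rates`.
    """
    return max(rates.values()) - min(rates.values()) > max_gap


# Made-up sample data for illustration only.
records = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", True, True),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]
rates = sensitivity_by_group(records)
disparity = flag_disparity(rates)
```

With this sample data, subgroup A has a sensitivity of 0.75 and subgroup B about 0.33, so the check flags a disparity. A fuller evaluation would also look at specificity, calibration, and confidence intervals, because small subgroups produce noisy estimates.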

Another concern is overreliance on AI outputs. Even when AI tools are designed to support clinicians rather than replace them, there is a risk that busy healthcare environments may encourage unquestioned reliance on algorithmic recommendations. Without strong internal governance, hospitals may unintentionally elevate AI suggestions to decision-making authority, which could undermine clinical judgment.

Transparency and Explainability Challenges

Transparency remains one of the most debated issues in AI regulation. Many AI models, particularly those based on deep learning, operate as black boxes. This means clinicians may see a recommendation but not fully understand how it was generated.

The FDA has indicated that explainability is a key factor in determining whether an AI tool qualifies for relaxed oversight. Tools that clearly show data inputs, logic pathways, or confidence levels are considered lower risk. However, experts argue that explainability standards are still evolving and may differ significantly across vendors.
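To make the distinction concrete, the sketch below shows what a transparent output might look like: instead of returning only a score, a hypothetical rule-based tool returns the inputs it used and how many points each one contributed, so a clinician can check the logic. The `RULES` table, weights, and threshold are invented for illustration and are not a validated clinical score; deep learning models usually need separate explanation techniques to expose comparable information.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Recommendation:
    """Output that carries the evidence a clinician needs to review it."""
    score: int
    flagged: bool
    contributions: Dict[str, int]  # input name -> points it added
    inputs: Dict[str, float]       # raw values the tool used


# Hypothetical rules and weights for illustration only; not a validated clinical score.
RULES: Dict[str, Tuple[Callable[[float], bool], int]] = {
    "heart_rate": (lambda v: v > 100, 2),
    "resp_rate": (lambda v: v > 22, 2),
    "systolic_bp": (lambda v: v < 100, 3),
}


def explainable_risk(vitals: Dict[str, float], threshold: int = 4) -> Recommendation:
    """Score vitals with transparent point rules and report each rule's contribution."""
    contributions = {
        name: points
        for name, (triggered, points) in RULES.items()
        if name in vitals and triggered(vitals[name])
    }
    score = sum(contributions.values())
    return Recommendation(score, score >= threshold, contributions, dict(vitals))


rec = explainable_risk({"heart_rate": 110, "resp_rate": 18, "systolic_bp": 95})
```

For this example patient, the tool returns a score of 5 and shows that heart rate and blood pressure, not respiratory rate, drove the flag, which is the kind of reviewable basis the criteria above describe.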

Without regulatory review, transparency becomes largely self-regulated. This raises concerns about consistency and quality across the market. Healthcare providers must therefore demand clear documentation and validation data from AI vendors before deployment.

FDA Relaxes Oversight of AI Devices – Legal and Liability Implications

The FDA's relaxed oversight of AI devices also introduces complex legal questions. If an AI system contributes to a medical error, determining responsibility can be challenging. Liability may involve software developers, healthcare institutions, clinicians, or data providers.

In traditional medical device regulation, FDA approval often provides a degree of legal clarity. Under enforcement discretion, that clarity may be reduced. Legal experts suggest that hospitals and vendors should clearly define responsibility through contracts, risk-sharing agreements, and internal protocols.

As AI adoption grows, courts may increasingly rely on case law rather than regulatory precedent to resolve disputes related to AI-driven clinical decisions.

FDA Safeguards That Still Apply

Despite the policy shift, the FDA has made it clear that oversight has not disappeared. The agency continues to monitor AI devices through indirect but meaningful mechanisms.

High-risk AI devices that diagnose, treat, or operate autonomously are still subject to full regulatory review. Developers of lower-risk AI tools are expected to implement strong quality management systems, monitor performance continuously, and report adverse events when they occur.

The FDA has also emphasized the importance of real-world evidence, which refers to data collected from actual clinical use rather than premarket trials. This approach allows regulators to assess long-term performance, detect emerging risks, and intervene when necessary.
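Continuous performance monitoring can be sketched as a sliding window over recent cases in which the model's output is compared with the clinically confirmed outcome. In the example below, the class name, window size, minimum case count, and alert threshold are hypothetical; a real program would choose metrics and thresholds from the tool's validation data and its quality management system.

```python
from collections import deque
from typing import Deque, Optional


class RollingPerformanceMonitor:
    """Track agreement between model outputs and confirmed outcomes over recent cases.

    All defaults are hypothetical placeholders, not recommended values.
    """

    def __init__(self, window: int = 200, alert_below: float = 0.85, min_cases: int = 50):
        self.results: Deque[bool] = deque(maxlen=window)  # oldest cases drop off automatically
        self.alert_below = alert_below
        self.min_cases = min_cases

    def record(self, predicted: bool, confirmed: bool) -> None:
        """Store whether the model agreed with the clinically confirmed outcome."""
        self.results.append(predicted == confirmed)

    def accuracy(self) -> Optional[float]:
        """Share of recent cases where the model agreed; None before any cases."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        """Signal a review when recent accuracy falls below the alert level."""
        if len(self.results) < self.min_cases:  # too few cases for a stable estimate
            return False
        return self.accuracy() < self.alert_below


monitor = RollingPerformanceMonitor(window=10, alert_below=0.8, min_cases=5)
for predicted, confirmed in [(True, True)] * 4 + [(True, False)] * 3:
    monitor.record(predicted, confirmed)
```

With this sample history, the model agreed with the confirmed outcome in four of seven cases (about 0.57), below the 0.8 alert level, so `needs_review()` returns True. Because the window has a fixed size, older cases age out and the monitor reflects recent performance rather than a lifetime average.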

Global Regulatory Implications

The FDA’s approach to AI oversight has global significance. Many regulatory agencies worldwide view FDA policy as a reference point when shaping their own frameworks. A move toward risk-based, adaptive regulation may influence healthcare regulators in Europe, Asia, and emerging markets.

For multinational companies, this trend supports regulatory harmonization. When oversight models align across regions, companies can deploy AI solutions more efficiently and consistently. However, differences in ethical standards and data protection laws may still create regional challenges.

Impact on Healthcare Providers

Healthcare providers stand at the center of this regulatory shift. With faster access to AI tools, hospitals and clinics may benefit from improved efficiency, better resource allocation, and enhanced clinical support.

At the same time, providers assume greater responsibility for evaluating AI performance. This includes validating algorithms, training staff, monitoring outcomes, and ensuring ethical use. Many healthcare systems are now establishing internal AI governance committees to oversee adoption and usage.

The success of AI in clinical environments will depend not only on technology quality but also on organizational readiness.

Impact on Patients and Public Trust

For patients, the FDA's relaxed oversight of AI devices may lead to greater access to digital health tools and personalized care insights. AI has the potential to improve early detection, streamline care pathways, and reduce clinician workload.

However, patient trust remains essential. Patients must feel confident that AI tools are accurate, fair, and used responsibly. Clear communication about how AI supports care decisions can help maintain transparency and trust.

Public confidence will depend on how well healthcare systems balance innovation with accountability.

Long-Term Outlook for AI Device Regulation

Looking ahead, experts expect AI regulation to become increasingly dynamic. Instead of one-time approvals, oversight may rely on continuous performance monitoring, adaptive standards, and collaborative governance.

The FDA's relaxed oversight of AI devices appears to signal a long-term transition rather than a temporary experiment. Regulators are acknowledging that software-driven healthcare requires flexible frameworks that evolve alongside technology.

The effectiveness of this approach will depend on sustained cooperation between regulators, developers, healthcare providers, and researchers.

Conclusion

The FDA's decision to relax oversight of AI devices represents a fundamental shift in how healthcare innovation is governed. By focusing regulatory attention on risk rather than technology type, the FDA aims to support innovation while preserving patient safety.

This approach creates new opportunities for faster deployment of AI tools but also places greater responsibility on industry and healthcare institutions. Transparency, ethical design, and real-world monitoring will be critical to long-term success.

As AI continues to reshape healthcare delivery, regulatory models must evolve in parallel. The FDA’s policy shift is an important step in that direction, but its real impact will be determined by how responsibly AI is developed and used in practice.

Frequently Asked Questions (FAQs)

What does the FDA's relaxed oversight of AI devices mean?

The FDA's relaxed oversight of AI devices means the US regulator is applying lighter regulatory control to certain low-risk, AI-based healthcare tools. These tools usually support clinicians rather than make final medical decisions. High-risk AI devices are still fully regulated.

Which AI devices are affected by the FDA's relaxed oversight?

The policy mainly affects clinical decision support software, AI-enabled wellness tools, wearable health devices, and some medical imaging support software. Clinical tools generally qualify only if clinicians can review and understand the AI recommendations.

Are AI medical devices still regulated by the FDA?

Yes. AI medical devices are still regulated by the Food and Drug Administration, and the relaxed oversight applies only to low-risk tools. AI systems that diagnose, treat, or operate autonomously still require FDA premarket review.

Why did the FDA relax oversight of AI devices?

The FDA relaxed oversight of AI devices to support innovation and keep pace with the rapid growth of digital health technologies. Traditional regulatory pathways are slow for software that updates frequently. A risk-based approach allows faster access to safe AI tools while focusing oversight on higher-risk products.

Does relaxed oversight of AI devices increase patient safety risks?

Relaxed oversight does not automatically increase risk, but it shifts more responsibility to companies and healthcare providers. Experts stress the need for transparency, real-world monitoring, and ethical AI development to maintain patient safety.

How does the decision affect healthcare providers?

Healthcare providers may gain faster access to AI tools that improve efficiency and clinical support. However, providers must take greater responsibility for validating AI performance, training staff, and monitoring outcomes within their organizations.

What does this mean for patients?

Patients may benefit from improved access to digital health tools, personalized insights, and faster innovation. Trust remains critical, and patients should be informed about how AI supports, rather than replaces, their doctors' decisions.

Will other countries follow the FDA's AI oversight model?

Many regulators worldwide closely watch FDA policy on AI devices. The risk-based approach to AI oversight may influence regulatory frameworks in Europe and Asia, although local laws and data protection rules will still apply.

Is this FDA policy change permanent?

The FDA has not stated that the relaxed oversight is permanent. It is part of an evolving regulatory approach, and oversight models may continue to change as AI technology and real-world evidence develop.

What should AI healthcare companies do under relaxed oversight?

AI healthcare companies should invest in strong internal validation, transparency, bias testing, and real-world performance monitoring. Voluntary compliance and ethical governance are essential to maintain trust and reduce long-term regulatory risk.